|

|

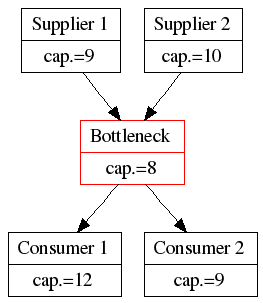

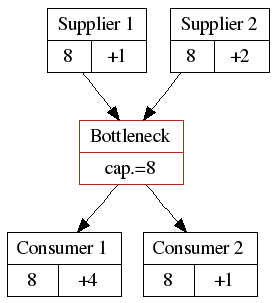

If you believe the Theory of Constraints, you know a few things:

- the throughput of your system is determined by the throughput of your bottleneck

- you should keep the bottleneck working at 100%

- you should keep the non-bottlenecks working at less than 100%, so that they don’t generate wasteful work-in-progress or are unable to cope with fluctuations.

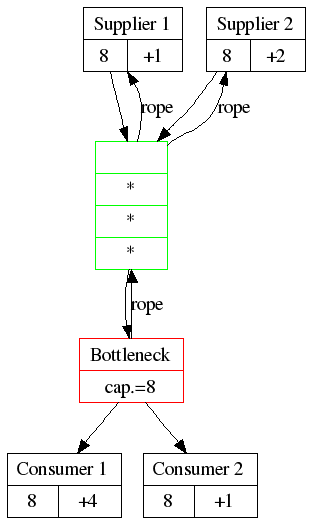

How do the non-bottlenecks know how fast they should work, without having a global coordination? By using the “Drum-Buffer-Rope” technique.

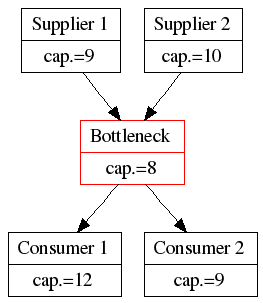

The system

The Drum

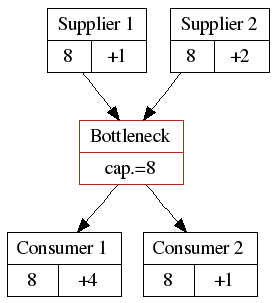

The bottleneck determines the speed at which the system works. This bottleneck can produce 8 items per unit of time. Therefore the bottleneck acts as a drum, beating the rhythm that the whole system should follow, like a drummer on a galley slave or the drummer of a marching band. The system has a rhythm of 8, so all participants in the system should follow the rhythm. This is one way to Level out the load. In Lean Thinking, this is called the “Takt Time”.

So, now we have everybody working at 8 items per unit of time.

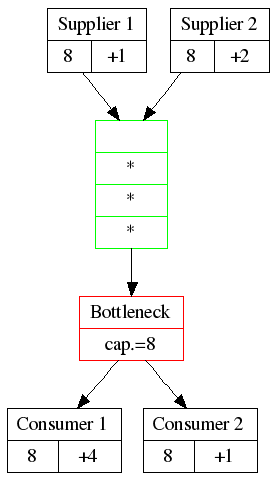

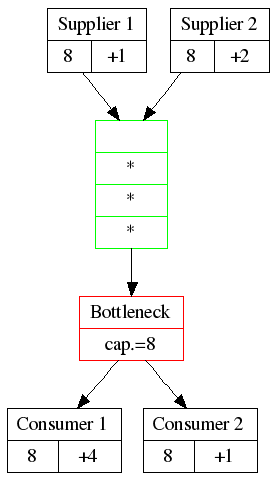

The Buffer

But what if supplier 1 or 2 can’t produce exactly 8 items? The bottleneck will be starved and we lose precious throughput. That can’t be allowed to happen. Therefore, put a buffer of raw material in front of the bottleneck, so that it never has to wait.

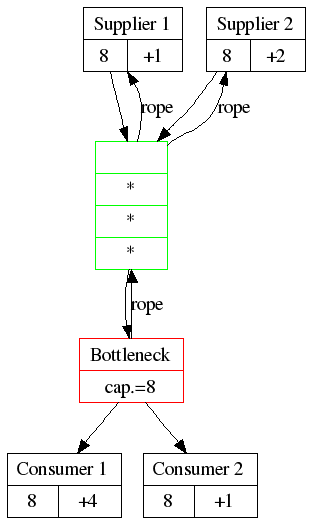

The Rope

Supplier 1 and 2 won’t always be perfectly in lockstep with the Bottleneck. We don’t want the buffer to become too big or too full, because then we have a lot of work-in-progress (and all the problems of cost, quality and overhead that generates). The bottleneck needs to limit the size of the buffer. Therfore, let the bottleneck signal to its suppliers when it has consumed an item and therefore should receive one item. Each time the bottleneck has consumed something, they ‘pull a cord’, which signals that a fresh item should be delivered. This is ‘Pull Scheduling‘

Spare capacity

We can see how suppliers and consumers need a bit of spare capacity:

- If the suppliers don’t have spare capacity, they will never be able to re-fill the buffer if something goes wrong

- If the consumers don’t have spare capacity, they won’t be able to handle fluctuations in output from the bottleneck and may thus delay processing bottleneck output.

Only Consumer 1 has a lot of spare capacity (+4). They might spend some of that capacity on other work, as long as they keep enough spare capacity to deal with the bottleneck’s output.

What does all that have to do with writing software?

Well, an IT project is a system that transforms ideas into running/useful software, where different resources perform some transformation steps. Each resource has a certain capacity and the steps are dependent on each other. And there’s certainly variation. Look quite a bit like the systems above.

Let’s see if we can apply Drum-Buffer-Rope to software development…

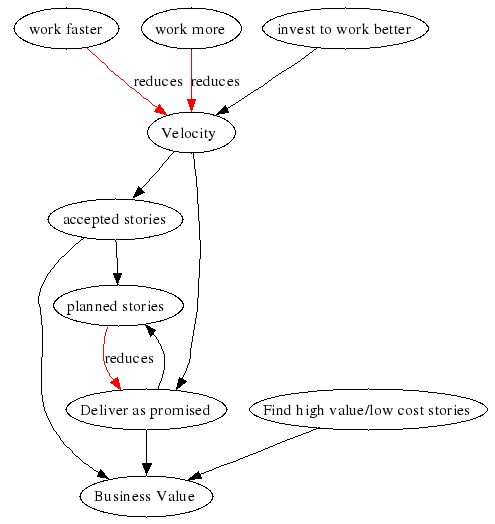

Faster. Faster, faster, faster! as David Byrne sings manically in “Air”.

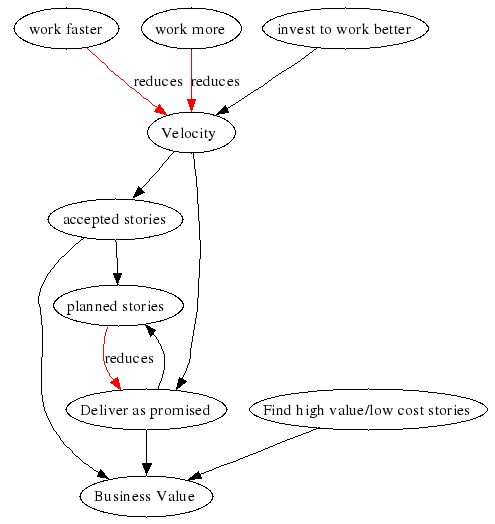

In previous entries we saw how an XP team is doomed to be on a mad treadmill that spins ever faster: the team commits to delivering as much work as the previous release (the team’s velocity). They try to improve their velocity to deliver a little more than promised. If they succeed, their velocity has gone up and they commit to delivering that much in the next release. And they try to improve their velocity again… And on and on.

Does it ever stop? If they fail to deliver all the promised stories by the end of the release, their velocity is lowered: only finished and accepted stories are counted. Next iteration they commit to a little less work, which should give them some time to analyze what happened and try some improvements. If they planned by business value only the lowest value stories are not delivered; the value of the release will still be near the expected value.

In my experience, working faster or working more hours does not increase the number of stories that get accepted. On the contrary, it is the surest way to reduce your velocity.

Use Velocity to evaluate investments

How yo you increase velocity? By investing in working better:

- Writing unit tests to design better and to guard against regressions

- Refactoring code to make the design better and the code easier to change

- Pairing to spread knowledge, techniques and conventions

- Regular reflection in “Retrospectives” or “Kaizen events to review and improve the process, tools and techniques

- Holding reviews, either formally or by pairing to improve code quality and spread knowledge

- Writing automated acceptance and performance tests

- Involving customers in defining and testing the software

- Buying better tools (but only reliable, thoroughly tested technology, that serves your people and process)

- Harvesting usable code

- Go get some training, go to a seminar, go to a conference

- Do something fun, relax

- Perform an experiment, try out a wacky idea

- …

And how do you know you investment worked? Your velocity goes up. That’s one more reason to have short releases: you get quick feedback on whether your investment. If it doesn’t work, stop and try something else.

Micro-investements

Typical for agile methods is that they use micro-investments: make a small investment, see what the effect is, decide on your next investment. Unit testing, pairing, refactoring, acceptance testing… require some daily effort, some discipline. Regular retrospectives evaluate the results of the investements and decide where to invest next. Thus, if you make a “wrong” investment, you haven’t lost much effort and you at least gain some knowledge.

Another advantage of micro-investements is that they can be taken at a much lower level than major investments: many of the decisions above can be taken by individuals or the team itself, without having to go through formal investment procedures.

Of course, the danger with micro-investments and local improvements is that local optimizations worsen global performance unless you use a “whole system” approach like Systems Thinking, the Theory of Constraints or Lean Thinking.

Big investments

“Classical” approaches tend to go for larger investments, in more upfront (analysis, design, architecture) work, frameworks, product lines… in an effort to look at the whole system and to avoid rework. In my experience, as long as you don’t get it completely wrong at the start, you will get where you need to be, in a timely and cost-effective way by building in and using regular feedback.

There is one area where it’s not wise to invest too little: in determining the real needs of the users/customers. What is the real problem? What is the best way of solving the problem? Software typically is only a small part of the solution, if it’s part of the solution at all. What else do you need? Is there value to created? Does the expected value exceed the expected cost? What is the required investement? Is the return justified? These questions should be asked before the project starts, and throughout the project. That’s what the “Find high value/low cost stories” in the systems diagram is all about.

Remember:

- A system that is not used has no value

- The cheapest system is the one not built

The wish to improve Velocity must be motivated by a drive internal to the team. It can’t be mandated from the outside. If you try to push velocity up, you might achieve that at the expense of your real goal: creating more value.

Who’s satisfied by achieving a ‘repeatable’ process? The only process worth having is an optimizing one. We need people who always want to do better. Because better is more fun

If we reward people based on how high their velocity is, we’re in fact rewarding people who work hard. Or who game the the system. Assuming we trust the developers to be honest with their estimates (and there are plenty of forces helping them stay honest), what’s wrong with rewarding people who work hard?

Well, working hard is not The Goal of our team. Our goal is to create value for the people who pay for our hard work. If we want to optimize our system, we should be very clear about our goal and keep it firmly in mind.

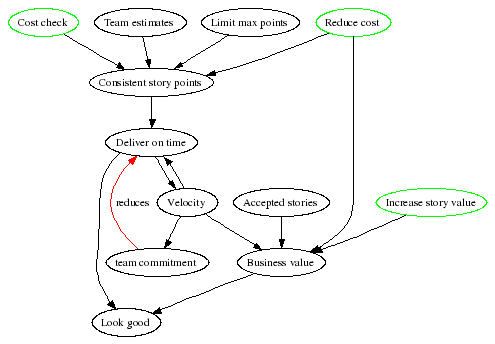

Fortunately, value is pretty easy to measure and track IF each story has a value estimate by the customer. Just like we can measure the effort we put into the release by counting the story points of accepted stories, we can count the business value points of the accepted stories. And that’s exactly what we track on the burn up/down chart. As this chart is displayed where everyone can see it, we’re always reminded of our goal. Our goal is to:

- Create the highest possible business value in each release…

- …for the lowest possible cost per release

Therefore, we should be rewarded on the amount of value we release. As long as it isn’t released and used, the software’s value is zero.

“Hey, that’s not fair! We’re being rewarded based on value estimates made by the customer? What if the customers don’t know the value? What if they’re wrong? What if they game the system to lower the value? What if…?” Well… you do trust your customer to do a good job, to be honest, to be competent and to make an honest mistake from time to time, don’t you? If not, stop reading this blog and do something about it!

Like story point estimates, business value estimates should be consistent. Developers are allowed and encouraged to ask for the reasoning behind the value estimate. This nicely balances with the way the customer asks for the reasoning behind the story points estimates of the developers. But it’s ultimately the customer’s reponsibility to get the value right.

Customers and developers work together to maximize story value, like they do to minimize story cost. Before and during the planning game they should look critically at all the stories. Can we do this in a simpler way? Is there a way to get more value out of this story or to get the value sooner?

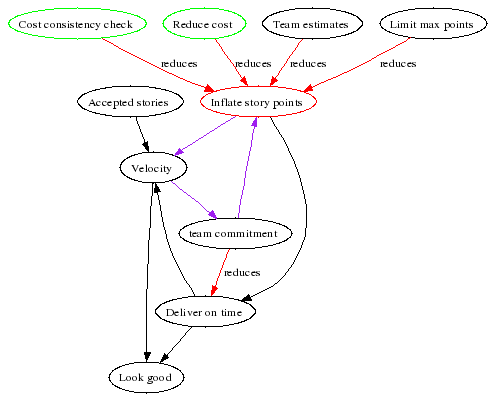

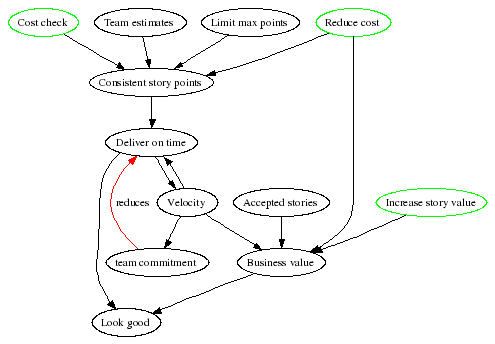

In this diagram we see that increasing velocity no longer makes you look good. Instead, producing more business value makes you look good. One of the ways to produce more business value is to genuinely increase the team’s velocity. Inflating story costs will not bring more value.

As in the previous diagram, if the team can deliver on time, their velocity will be a bit higher than planned. This means they will take on a little more work, commensurate with their increased velocity. But this reduces the odds that they will deliver on time next time, unless they find creative ways to increase their velocity.

We’ll see more about velocity in the next entry.

The previous entry described how to estimate using points and velocity. Can’t this system be mis-used by developers?

Yes…. Developers could assign artificially high estimates to stories, thereby making it seem as if they improved their velocity. Well, developers always could do this if they made estimates. There are some forces countering this:

- Are you going to give all your stories 5 points? You aren’t allowed to assign more points: if 5 is not enough you have to break down the story into smaller stories. Don’t you think the customer is going to ask questions if each story costs 5 points?

- A customer is allowed (and expected) to question the estimates: if stories A and B seem to have the same difficulty to the customer, but the developers assign a higher cost to B, the developers had better have a good explanation. If the explanation is based on the difficulty of the feature itself, the customer can only accept it. If the explanation is based on the technicalities of implementing the stories, this might be a “smell” that the design of the code is in need of refactoring. If the developers can’t explain the difference, they should re-estimate. Stories should be estimated consistently. A customer can verify this consistency.

- Story estimates are made by the team. The whole team would have to agree to “gaming the system”. Or at least, all of the most prominent and vocal members of the team. That’s quite difficult to keep up in the open, communication-rich environment of an agile team.

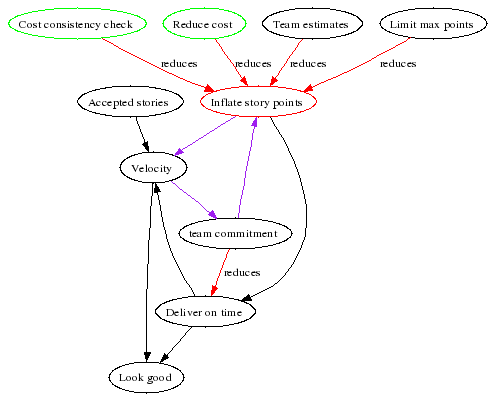

This system diagram shows how we can game the system to get a higher velocity, by working faster and more sloppily or by inflating our cost estimates. Sloppy work is kept in check by the acceptance tests the customer performs. Remember: only accepted stories count when calculating velocity.

Inflation can be kept under control by:

- The customer asking for the reasoning behind the estimates

- The customer and developers looking for lower-cost ways to get the same result

- The development team as a whole is accountable for the estimates

- Cost estimates are capped at 5

However, we still see a pernicious loop (in purple): if we inflate our estimates, we increase our velocity. If our velocity increases, the team has to commit to doing more work, which makes it unlikely to easily meet the deadline. Unless the estimates are inflated again…

We’ll see in the next entry how to break this loop.

What are story points?

Story points are an invented, team-local, measure of the innate complexity of a feature or story. How do we estimate using invented measures?

- In the first release:

- Sort the stories from easy to difficult. Create an absolute ordering by comparing each new story with those already on the table.

- Assign the easiest story 1 point. Assign the most difficult one 5 points. You may choose another range of numbers, but keep it small. This helps “level the load or “Heijunka“

- Divide the other stories into 5 groups, where the stories in each group have about the same difficulty. Give the stories in each group 1, 2, 3, 4 or 5 points.

- Done!

- In the next releases:

- Compare the new story with one or more existing stories, which have already been estimated (and preferably implemented) and seem to have the same difficulty. Give the new story the same number of points.

- Done!

The only important thing is that the stories are estimated consistently.

You still don’t know how many stories you can implement…

What’s velocity?

Velocity is another invented, team local measure of how much work the team can do. Velocity is the number of story points’ worth of stories the team can implement in one release. How do we know our team’s velocity?

- In the first release:

- We don’t know, so we’ll guess.

- Halfway the release we know a bit more: we’ll probably implement twice as many as we’ve already completed.

- In the next releases:

- We use the sum of the story points of all stories that were implemented and accepted by the customer in the previous release(s).

How many stories can you implement this release? Velocity points worth.

Why use this two step process?I feel it divides estimates into two separate factors:

- Story Points tells me the innate complexity of a feature. I don’t expect this value to change over time. If we want to get more done, we can work with the customer to look at the stories critically and see if there’s no way of simplifying the story (and thereby reducing its cost estimate), without significantly affecting the story’s value. Story points are quite imprecise and reflect that my estimates are also quite imprecise; they give none of that false sense of precision that estimations in man-hours/day give. Over a whole release, the imprecisions of the estimates mostly cancel out.

- Velocity tells if the team is speeding up or going slower if the story point estimates are consistent. If we want to get more done, we can look at various ways to improve the team’s performance. Velocity is a great way to quickly see the effects of various interventions. E.g. if we spend some time refactoring a particularly nasty piece of the code, I would expect our velocity to go up after the refactoring. If we skimp on code and test quality, I expect our velocity to go down.

Keeping the cost of stories low is a joint responsibility of customers and developers. Keeping velocity high is the responsibility of developers.

|