I’ve been going on a bit lately about the value I get from performing “Root Cause Analysis” when I encounter a bug.

A simple algorithm to write perfect code

You don’t have to watch the presentation. The message is this: each time there’s a bug:

- Someone finds a problem and reports it to you

- Thank the reporter

- Reproduce the problem

- Add (at least) one failing test

- Correct the problem

- Rerun all tests and correct your correction until the tests pass

- Improve your tests

- Improve the way you write tests

- Look for similar problems. Goto 2

- Make this type of problem impossible

- Perform the actions that were identified during the Root Cause Analysis

But that’s just common sense!

We’re all agile lean continuously improving test driven extreme programmers, aren’t we? Doesn’t everybody do this?

Yes… but…

- It takes too much time

- We can’t do this for every bug because we’ve got too many bugs

- We don’t want to waste time on bugs, we prefer to spend time adding valuable features

- As Dijkstra said: “Program testing can be a very effective way to show the presence of bugs, but it is hopelessly inadequate for showing their absence.”

- <your objection here>

Just a little taste

The presentation tells the story of a team that performed a Root Cause Analysis. Here’s another team’s story:

Coach: Can we try a small Root Cause Analysis experiment?

Dev: Yes, but… we don’t have a lot of time.

Coach: Do you have one hour? We can timebox the experiment.

Dev: Sure, but… you can’t do anything useful in one hour.

Coach: Maybe you can’t; maybe we can.

Dev: ???

Coach: Have you seen any interesting bugs lately?

Dev: Well, I just fixed a bug. But it’s so trivial you won’t discover anything useful.

Coach: Thank you! Let’s see.

I love clear bug reports

First we look at the bug report:

    GIVEN a swizzled foobar (*)
    WHEN I change its properties
    THEN I EXPECT to be offered a choice between 'A', 'B' or 'Z' for the status
    BUT I'm offered 'A', 'B' or 'C'
    (*) all names of domain objects have been changed to protect the innocent and the guilty

Let’s apply the algorithm

Coach: Have you thanked the reporter yet?

Dev: No…

Coach: Let’s do it now.

Dev: OK… <calls the Product Manager>

Dev: Hi, I just called to thank you for reporting the bug about the property dialog of the swizzled foobar.

Product Manager: <surprised> OK…

Dev: The report was so clear the bugfix almost wrote itself. Thanks!

Product Manager: You’re welcome!

Coach: OK. We’ve now done the hardest part; let’s do the next steps.

Next step: reproduce the problem. That’s quite easy: start up the application, select a foobar, swizzle it and open its property dialog. Look at the dropdown control for the status: it presents options ‘A’, ‘B’ and ‘C’.

Coach: We have a manual test procedure. Can we automate this test?

Dev: No. This is user-interface code. We’ve performed some experiments earlier and decided that it wasn’t worth the time and effort to create and maintain automated tests for the user interface.

Coach: OK.

Fix and Test

The fix was really simple. The buggy code offered ‘C’ for every foobar; the fixed code checks for swizzledness first.

Fixed:

    if (foobar.swizzled()) {
        status.add('Z') ;
    } else {
        status.add('C') ;
    }

Rerun the application, perform the manual test: the correct options are now offered.

Result! Bug fixed! High Five! Job well done.

Hmmmm… The fix is indeed simple and the bug is now gone. But that IF is worrying. I’ve seen (and had to maintain) code that was riddled with IFs: each time a bug was detected, the developers added an IF for the specific conditions and expected outcomes described in the bug report. Let’s add a post-RCA action: let’s read and discuss the ANTI-IF campaign.

Dev: You see now: this is a trivial bugfix, there’s nothing to learn here.

Coach: Maybe. We’ve only spent 10 minutes so far. Let’s see the rest of this code.

Show me the code

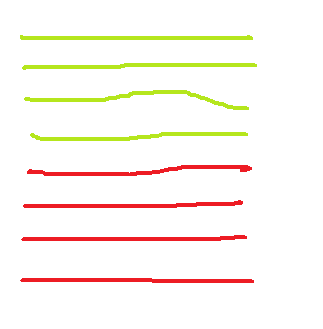

If I take off my glasses, squint a bit and look at the code, this is what I see:

    void showProperties(Foobar foobar) {

Red lines are user interface code, calls to the UI toolkit. Green lines are plain Java, C#, Ruby… UI-independent code.

Guess where the bug is… In the Green code. But we can’t test it, because we can’t test user interface code. So, that doesn’t help us.

A small step for a programmer…

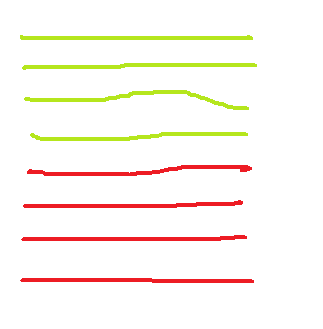

We don’t have any tests, so any refactoring is going to be risky. Let’s do some simple, safe refactoring: let’s group all the Green code together and all the Red code together.

Now the code looks something like this:

    void showProperties(Foobar foobar) {

Exactly the same code, it does exactly the same thing. What have we achieved? Nothing. Yet.
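
To make the colour coding concrete, here is a minimal sketch of what such a method might look like before and after the regrouping. It is an illustration under assumptions, not the team’s code: `Label` and `Dropdown` are hypothetical stand-ins for the UI toolkit, `Foobar.name()` is an invented second property, and the status options are assumed to be a list of characters.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical domain object; only swizzle() and swizzled() appear in the story.
class Foobar {
    private final String name;
    private boolean swizzled;
    Foobar(String name) { this.name = name; }
    String name() { return name; }
    void swizzle() { swizzled = true; }
    boolean swizzled() { return swizzled; }
}

// Hypothetical stand-ins for UI toolkit widgets.
class Label {
    String text = "";
    void setText(String text) { this.text = text; }
}

class Dropdown {
    List<Character> options = new ArrayList<>();
    void setOptions(List<Character> options) { this.options = new ArrayList<>(options); }
}

// Before: GREEN and RED lines are interleaved.
class InterleavedDialog {
    final Label titleLabel = new Label();
    final Dropdown statusDropdown = new Dropdown();

    void showProperties(Foobar foobar) {
        String title = "Properties of " + foobar.name();   // GREEN
        titleLabel.setText(title);                          // RED
        List<Character> status = new ArrayList<>();         // GREEN
        status.add('A');                                    // GREEN
        status.add('B');                                    // GREEN
        if (foobar.swizzled()) {                            // GREEN
            status.add('Z');
        } else {
            status.add('C');
        }
        statusDropdown.setOptions(status);                  // RED
    }
}

// After: same statements, with GREEN grouped first and RED last.
class GroupedDialog {
    final Label titleLabel = new Label();
    final Dropdown statusDropdown = new Dropdown();

    void showProperties(Foobar foobar) {
        // GREEN
        String title = "Properties of " + foobar.name();
        List<Character> status = new ArrayList<>();
        status.add('A');
        status.add('B');
        if (foobar.swizzled()) {
            status.add('Z');
        } else {
            status.add('C');
        }

        // RED
        titleLabel.setText(title);
        statusDropdown.setOptions(status);
    }
}
```

The reordering is only safe because no GREEN line reads a value back from a widget; if one did, it would have to stay after the RED line it depends on.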

Another small step

Now that we’ve got all the green code together, what does it do? Essentially, it fills in a number of variables (like the list of values for the status) with the values to put into the UI controls.

Let’s do another small, safe refactor:

- Create a new class. I don’t have a good name yet, let’s call it “Stuff” for the moment

- Move every local variable in the green code into the Stuff class

- Create an instance of Stuff in the function

- Fill in all fields of the instance, just like you fill in the local variables

Again, nothing really changes. We’ve just collected all local variables in one object.

Our code now looks something like this.

    void showProperties(Foobar foobar) {
        // GREEN
        Stuff stuff = new Stuff() ;
        stuff.status.add('A') ;
        stuff.status.add('B') ;
        if (foobar.swizzled()) {
            stuff.status.add('Z') ;
        } else {
            stuff.status.add('C') ;
        }
        ....
        // RED
        statusDropdown.setOptions(stuff.status) ;
    }
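
The listing above uses `Stuff` without showing the class itself. A minimal sketch of what it might look like, assuming the status options are a plain `java.util.List` of characters (so `size()` rather than `getSize()`); the `title` field is a hypothetical example of a second former local variable:

```java
import java.util.ArrayList;
import java.util.List;

// Holds what used to be the local variables of the GREEN code.
// No behaviour yet: it is just a bag of values for the RED code to read.
class Stuff {
    // Assumption: status options are single characters such as 'A', 'B', 'Z'.
    final List<Character> status = new ArrayList<>();

    // Hypothetical: any other former local variable becomes a field like this.
    String title = "";
}
```

Public fields are deliberate at this stage: the aim is to move variables without changing how the surrounding code reads or writes them.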

Finally, a real refactoring

Now that the code has been reorganised, we can finally do a real refactoring, still taking small steps because we’re working without a safety net.

Let’s extract the green and red code into separate methods. Our code now looks like this:

    void showProperties(Foobar foobar) {
        // GREEN
        Stuff stuff = prepare(foobar) ; // TODO: find a better name
        // RED
        display(stuff) ;
    }

Note to self: “Stuff” and “prepare” aren’t very descriptive. That’s probably because we don’t understand the code well enough yet. Let’s revisit the naming when we understand the code better.
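
For reference, the two extracted methods might look roughly like this. It is a sketch under assumptions: `Dropdown` is a hypothetical stand-in for the real UI control, and `Foobar` and `Stuff` are reduced to the minimum the example needs.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal domain object.
class Foobar {
    private boolean swizzled;
    void swizzle() { swizzled = true; }
    boolean swizzled() { return swizzled; }
}

class Stuff {
    final List<Character> status = new ArrayList<>();
}

// Hypothetical stand-in for a UI toolkit control.
class Dropdown {
    List<Character> options = new ArrayList<>();
    void setOptions(List<Character> options) { this.options = new ArrayList<>(options); }
}

class FoobarPropertyDialog {
    final Dropdown statusDropdown = new Dropdown();

    void showProperties(Foobar foobar) {
        Stuff stuff = prepare(foobar);  // GREEN
        display(stuff);                 // RED
    }

    // GREEN: decides what to show, without touching any widget.
    Stuff prepare(Foobar foobar) {
        Stuff stuff = new Stuff();
        stuff.status.add('A');
        stuff.status.add('B');
        if (foobar.swizzled()) {
            stuff.status.add('Z');
        } else {
            stuff.status.add('C');
        }
        return stuff;
    }

    // RED: copies the prepared values into the widgets, nothing more.
    void display(Stuff stuff) {
        statusDropdown.setOptions(stuff.status);
    }
}
```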

We’re now 30 minutes into the Root Cause Analysis.

A giant leap for testing

Aha! We now have a method “prepare” which contains the bug AND doesn’t depend on the UI. Can we test the code now? Yes we can!

    void swizzledFoobarsHaveZStatus() {
        // GIVEN a swizzled foobar
        Foobar foobar = makeAFoobarSomehow() ;
        foobar.swizzle() ;
        // WHEN I change its properties
        Stuff stuff = foobarPropertyDialog.prepare(foobar) ;
        // THEN I EXPECT to be offered a choice between 'A', 'B' or 'Z' for the status
        assertEquals(3,stuff.status.getSize()) ;
        assertEquals('A',stuff.status.get(0)) ;
        assertEquals('B',stuff.status.get(1)) ;
        assertEquals('Z',stuff.status.get(2)) ;
    }

This test fails before the fix. It succeeds after the fix. We now have an automated regression test for this bug.

Improve your tests

This test raises a lot of questions:

- What if the foobar isn’t swizzled? Are the options ‘A’, ‘B’ and ‘C’ correct in this case? Should we add a test?

- Are there any other special cases for status depending on the properties of a Foobar?

- Are there any other fields that depend on the properties of the Foobar?

- Are there any other properties of the domain that are important?

- ….

This could be the start of a really great conversation with the Product Manager and testers!
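
Once that conversation has answered the first question, the answer can be pinned down in a second test. The sketch below assumes the Product Manager confirms that ‘A’, ‘B’ and ‘C’ are right for a foobar that isn’t swizzled; that is an assumption, not something the bug report states. It uses a plain Java check instead of a test framework to stay self-contained, and `Foobar`, `Stuff` and `prepare` are minimal hypothetical versions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal domain object.
class Foobar {
    private boolean swizzled;
    void swizzle() { swizzled = true; }
    boolean swizzled() { return swizzled; }
}

class Stuff {
    final List<Character> status = new ArrayList<>();
}

// Only the UI-independent part of the dialog is needed for this test.
class FoobarPropertyDialog {
    Stuff prepare(Foobar foobar) {
        Stuff stuff = new Stuff();
        stuff.status.add('A');
        stuff.status.add('B');
        if (foobar.swizzled()) {
            stuff.status.add('Z');
        } else {
            stuff.status.add('C');
        }
        return stuff;
    }
}

class UnswizzledFoobarTest {
    static void unswizzledFoobarsHaveCStatus() {
        // GIVEN a foobar that is not swizzled
        Foobar foobar = new Foobar();
        // WHEN I change its properties
        Stuff stuff = new FoobarPropertyDialog().prepare(foobar);
        // THEN I EXPECT to be offered a choice between 'A', 'B' or 'C' (assumed rule)
        List<Character> expected = List.of('A', 'B', 'C');
        if (!stuff.status.equals(expected)) {
            throw new AssertionError("expected " + expected + " but got " + stuff.status);
        }
    }
}
```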

Result: we’re 45 min into the RCA and we’ve written an automated regression test of that bug in supposedly untestable code.

Names

Those stupid names “Stuff” and “prepare” irritate me. Now that we’ve got automated tests for this code, we can refactor more audaciously.

What is “Stuff”? It contains the data as it’s shown in the View of the Foobar property dialog. It’s a ViewModel. Let’s rename it to FoobarProperties.

What does “prepare” do? It creates a FoobarProperties and stuffs values into it. What does FoobarProperties do? Nothing, it just sits there and contains these values. We might as well move the code from prepare into FoobarProperties:

    void showProperties(Foobar foobar) {
        FoobarProperties properties = new FoobarProperties(foobar) ;
        display(properties) ;
    }
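
The resulting ViewModel class might look like the sketch below. It assumes the body of `prepare` moves into the constructor unchanged and that the status options are a list of characters; `Foobar` is a minimal hypothetical version.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal domain object.
class Foobar {
    private boolean swizzled;
    void swizzle() { swizzled = true; }
    boolean swizzled() { return swizzled; }
}

// ViewModel: everything the property dialog shows, with no UI toolkit dependency.
class FoobarProperties {
    final List<Character> status = new ArrayList<>();

    // Formerly the body of prepare().
    FoobarProperties(Foobar foobar) {
        status.add('A');
        status.add('B');
        if (foobar.swizzled()) {
            status.add('Z');
        } else {
            status.add('C');
        }
    }
}
```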

And now the unit test becomes a unit test of FoobarProperties, a pure processing class, no longer of the mixed processing/UI class FoobarPropertyDialog:

    void swizzledFoobarsHaveZStatus() {
        // GIVEN a swizzled foobar
        Foobar foobar = makeAFoobarSomehow() ;
        foobar.swizzle() ;
        // WHEN I change its properties
        FoobarProperties properties = new FoobarProperties(foobar) ;
        // THEN I EXPECT to be offered a choice between 'A', 'B' or 'Z' for the status
        assertEquals(3,properties.status.getSize()) ;
        assertEquals('A',properties.status.get(0)) ;
        assertEquals('B',properties.status.get(1)) ;
        assertEquals('Z',properties.status.get(2)) ;
    }

Improve the way you test

Now that we have a unit test for FoobarProperties we can add more tests for different cases. Looking at those tests we’ll see that some properties of a Foobar are independent of its state. For example, we always have the statuses ‘A’ and ‘B’. We can include those invariants in our tests. We’ll talk with the Product Manager first and together extend the test case.
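
An invariant like “‘A’ and ‘B’ are always offered” can be written as one test that runs over every state we know about. A sketch in plain Java, assuming swizzled and not swizzled are the only two states (more may turn up when we talk to the Product Manager); `Foobar` and `FoobarProperties` are minimal hypothetical versions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal domain object.
class Foobar {
    private boolean swizzled;
    void swizzle() { swizzled = true; }
    boolean swizzled() { return swizzled; }
}

class FoobarProperties {
    final List<Character> status = new ArrayList<>();

    FoobarProperties(Foobar foobar) {
        status.add('A');
        status.add('B');
        status.add(foobar.swizzled() ? 'Z' : 'C');
    }
}

class FoobarPropertiesInvariants {
    // Checks the invariant for each known state instead of one hand-picked case.
    static void statusAlwaysOffersAAndB() {
        for (boolean swizzled : new boolean[] {false, true}) {
            Foobar foobar = new Foobar();
            if (swizzled) {
                foobar.swizzle();
            }
            FoobarProperties properties = new FoobarProperties(foobar);
            if (!properties.status.containsAll(List.of('A', 'B'))) {
                throw new AssertionError(
                    "swizzled=" + swizzled + ": 'A' and 'B' missing from " + properties.status);
            }
        }
    }
}
```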

We can now see that we consider too much code as “UI code” and therefore not testable. If we separate ViewModel code from View code, we can cover more code with fast unit tests.

Look for similar bugs

Coach: Are there other places in the UI where we display or change the status of a Foobar?

Dev: Yes… Maybe 3-4 screens and dialogs.

Coach: Did we make the same mistake there?

Dev: Let’s quickly test the application. It would be quite embarrassing if we got the same bug report for another screen…

Dev: Ooops! We made the same mistake in one other dialog. The other dialogs are OK.

Coach: Let’s note the dialog to be fixed and move on, because we’ve only got a few minutes left in our timebox and I’d like to do the next step first before fixing this bug.

Dev: What more can we do?

Make this type of problem impossible

Now, before we fix the buggy dialog, we’ll apply the same safe refactorings to make the code testable, add tests to demonstrate the bug (and serve as regression tests once the bug is fixed) and then fix the bug. If we consistently test our ViewModel code and validate our ViewModel behaviour with the Product Manager, we’re less likely to overlook certain cases.

The fact that this bug appears in some dialogs and not in others tells us that we’re looking at different code. Some code takes into account the “swizzledness” of a Foobar, some code doesn’t.

But we can do better: why is this code duplicated? Ideally, we’d want one instance of the code that decides which status options to show. Otherwise, if the rules change (or more likely, if we discover we’ve missed an existing rule) we’ll have to remember to update all the pieces of code that determine status options of a Foobar. So, once we’ve extracted and fixed the ViewModels from both our buggy Dialogs, we’ll extract the common code that determines the status options. Of course, this common code will have unit tests that verify this common behaviour.
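
A sketch of what that extraction could look like. The names `StatusOptions`, `forFoobar` and `OtherDialogProperties` are hypothetical, as is the assumption that both ViewModels need exactly the same rule; the point is only that the decision lives in one place and has its own tests.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal domain object.
class Foobar {
    private boolean swizzled;
    void swizzle() { swizzled = true; }
    boolean swizzled() { return swizzled; }
}

// The single place that knows which status options a Foobar may have.
class StatusOptions {
    static List<Character> forFoobar(Foobar foobar) {
        List<Character> options = new ArrayList<>(List.of('A', 'B'));
        options.add(foobar.swizzled() ? 'Z' : 'C');
        return options;
    }
}

// ViewModel of the property dialog.
class FoobarProperties {
    final List<Character> status;

    FoobarProperties(Foobar foobar) {
        this.status = StatusOptions.forFoobar(foobar);
    }
}

// Hypothetical ViewModel of the second dialog that had the same bug.
class OtherDialogProperties {
    final List<Character> status;

    OtherDialogProperties(Foobar foobar) {
        this.status = StatusOptions.forFoobar(foobar);
    }
}
```

If a new rule turns up, only `StatusOptions` and its tests need to change; the ViewModels pick it up automatically.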

Afterwards, we’ll do the same to the Dialogs that were implemented correctly. We can do this refactoring gradually, once the two bugs have been corrected. Ideally, we’ll do this if we have to change the code of those Dialogs anyway, to fix a bug or add a feature. Even if we don’t touch these classes, we’ll make sure we refactor them within X time, so that we don’t have to remember these “dangling” refactorings too long.

Some developers have taken the “swizzledness” into account, some haven’t. Let’s share our findings with the team and the Product Manager. We may have to organise a session to clarify some of the subtleties of our domain. Once they’re clear we can encode and document them as automated regression tests, so that we get a failing test next time we forget to take into account one of those subtleties.

Dev: Hey, coach, your hour is up! Shall we get a coffee?

Coach: Great! Let’s step away from the keyboard 🙂

Looking back (over a cup of coffee)

What have we done in 60 minutes?

- Isolated code that contains a bug from “untestable” code

- Added an automated test that shows the bug and can be run as a regression test after the fix

- Simplified the code by separating the ViewModel from the View

- Identified a new class of testable code, the ViewModels

- Found another bug before anyone noticed

- We know how to make code testable and better factored for this type of bug

- We know how to avoid this type of problem and be ready for changes in the domain

Looking forward

During the Root Cause Analysis we noted a number of actions to be taken. We make each of these tasks visible (for example, by adding them to the Kanban board):

- Extend the FoobarTest to cover more cases, in collaboration with the Product Manager and testers

- Test and fix the second Foobar status bug

- Make the code that determines the allowed status values common between the two bugfixed dialogs. Validate tests with the Product Manager

- Refactor the other dialogs that display Foobar statuses so that they use the common code

- List the subtleties of the domain and organise a knowledge sharing session with the Product Manager

- Ask the team to review and discuss the ANTI-IF campaign

- Let’s read some more about ViewModel architectures. If it looks useful we’ll make this a standard way of designing our user interfaces. If we do, we’ll have to see how we transition gradually to such an architecture.

Dev: Well… I never expected so many issues and ideas to come out of a Root Cause Analysis of such a simple bug report. Let’s hope we don’t do too many of those Root Cause Analyses, because they generate a lot of work.

Coach: ????

Dev: That was a joke, coach. 🙂 I’m sold. Now, let’s get back and fix that bug we found.